(Updated 03/06/18 to include results for Blumlein Pair microphone arrangement.)

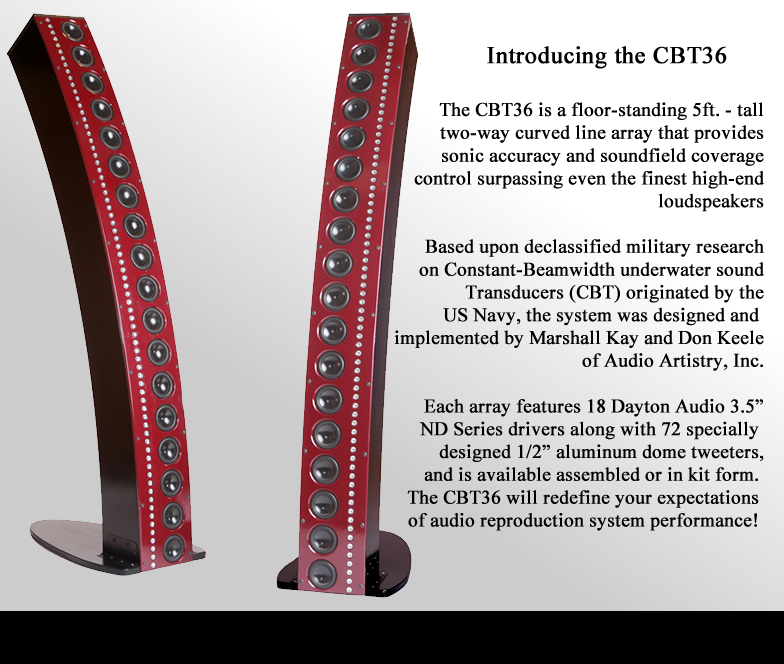

A computer simulation of stereo speakers plus listener, showing the listener’s perception of the directions of three sources that have previously been ‘recorded’. The original source positions are shown overlaid with the loudspeakers.

Ever since building DSP-based active speakers and hearing real stereo imaging effectively for the first time, it has seemed to me that ordinary stereo produces a much better effect than we might expect. In fact, it has intrigued me, and it has been hard to find a truly satisfactory explanation of how and why it works so well.

My experience of stereo audio is this:

- When sitting somewhere near the middle between two speakers and listening to a ‘purist’ stereo recording, I perceive a stable, compelling 3D space populated by the instruments and voices in different positions.

- The scene can occasionally extend beyond the speakers (and this is certainly the case with recordings made using QSound and other such processes).

- Turning my head, the image stays plausible.

- If I move position, the image falls apart somewhat, but when I stop moving it stabilises again into a plausible image – although not necessarily resembling what I might have expected it to be prior to moving.

- If I move left or right, the image shifts in the direction of the speaker I am moving towards.

An article in Sound On Sound magazine may contain the most perceptive explanation I have seen:

> The interaction of the signals from both speakers arriving at each ear results in the creation of a new composite signal, which is identical in wave shape but shifted in time. The time‑shift is towards the louder sound and creates a ‘fake’ time‑of‑arrival difference between the ears, so the listener interprets the information as coming from a sound source at a specific bearing somewhere within a 60‑degree angle in front.

This explanation is more elegant than the one that simply says that if the sound from one speaker is louder we will tend to hear it as if coming from that direction – I have always found it hard to believe that such a ‘blunt’ mechanism could give rise to a precise, sharp 3D image. Similarly, it is hard to believe that time-of-arrival differences on their own could somehow be relayed satisfactorily from two speakers unless the user’s head was locked into a fixed central position.

The Sound On Sound explanation says that by reproducing the sound from two spaced transducers that can reach both ears, the relative amplitude also controls the relative timing of what reaches the ears, thus giving a timing-based stereo image that, it appears, is reasonably stable with position and head rotation. This is not a psychoacoustic effect where volume difference is interpreted as a timing difference, but the literal creation of a physical timing difference from a volume difference.

There must be timbral distortion because of the mixing of the two separately-delayed renditions of the same impulse at each ear, but experience seems to suggest that this is either not significant or that the brain handles it transparently, perhaps because of the way it affects both ears.
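The mechanism can be checked on paper for a single sine wave. What follows is only a sketch under simplifying assumptions of my own, not taken from the article: a centred listener, speaker gains $g_L$ and $g_R$, equal attenuation on all four speaker-to-ear paths, no head shadow, and a path to the far ear that takes a time $\Delta$ longer than the path to the near ear (delay $\tau_n$). The signals at the left and right ears are then

$$
E_L = g_L e^{-j\omega\tau_n} + g_R e^{-j\omega(\tau_n+\Delta)}, \qquad
E_R = g_R e^{-j\omega\tau_n} + g_L e^{-j\omega(\tau_n+\Delta)} .
$$

Factoring out $e^{-j\omega(\tau_n+\Delta/2)}$ gives

$$
E_{L,R} = e^{-j\omega(\tau_n+\Delta/2)}\left[(g_L+g_R)\cos\frac{\omega\Delta}{2} \;\pm\; j\,(g_L-g_R)\sin\frac{\omega\Delta}{2}\right],
$$

with the plus sign for the left ear and the minus sign for the right. The two brackets are complex conjugates, so for $\omega\Delta < \pi$ the interaural phase difference is

$$
\phi = \arg E_L - \arg E_R = 2\arctan\!\left(\frac{g_L-g_R}{g_L+g_R}\,\tan\frac{\omega\Delta}{2}\right),
$$

and at low frequencies the equivalent time difference is

$$
\tau = \frac{\phi}{\omega} \approx \Delta\,\frac{g_L-g_R}{g_L+g_R}.
$$

Equal gains give $\tau = 0$, a single speaker gives the full $\pm\Delta$, and anything in between slides the apparent arrival time towards the louder speaker. Under the same assumptions the magnitude is $|E_L|^2 = |E_R|^2 = g_L^2 + g_R^2 + 2 g_L g_R\cos\omega\Delta$: the comb-filter colouration is present, but it is identical at both ears.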

Blumlein’s Patent

Blumlein’s original 1933 patent is reproduced here. The patent discusses how time-of-arrival may take precedence over volume-based cues depending on frequency content.

It is not immediately apparent to me that what is proposed in the patent is exactly what goes on in most stereo recordings. As far as I am aware, most ‘purist’ stereo recordings don’t exaggerate the level differences between channels, but simply record the straight signal from a pair of microphones. However, the patent goes on to make a distinction between “pressure” and “velocity” microphones which, I think, corresponds to omni-directional and directional microphones. It is stated that in the case of velocity microphones no amplitude manipulation may be needed. The microphones should be placed close together but facing in different directions (often called the ‘Blumlein Pair’) as opposed to being spaced as “artificial ears”.

Blumlein Pair microphone arrangement

The Blumlein microphones are bi-directional, i.e. they also respond to sound from the back.

Going by the SoS description, this type of arrangement would record no timing-based information (from the direct sound of the sources at any rate), just like ‘panpot stereo’, but the speaker arrangement would convert orientation-induced volume variations into a timing-based image derived from the acoustic summation of different volume levels via acoustic delays to each ear. This may be the brilliant step that turns a rather mundane invention (voices come from different sides of the cinema screen) into a seemingly holographic rendering of 3D space when played over loudspeakers.

Thus the explanation becomes one of geometry plus some guesswork regarding the way the ears and brain correlate what they are hearing, presumably utilising both time-of-arrival and the more prosaic volume-based mechanism which says that sounds closer to one ear than the other will be louder – enhanced by the shadowing effect of the listener’s head in the way. Is this sufficient to plausibly explain the brilliance of stereo audio? Does a stereo recording in any way resemble the space in which it was recorded?

A Computer Simulation

In order to help me understand what is going on I have created a computer simulation which works as follows (please skip this section unless you are interested in very technical details):

- It is a floor plan view of a 2D slice through the system. Objects can be placed at any XY location, measured in metres from an origin.

- There are no reflections; only direct sound.

- The system comprises:
  - a recording system
  - a playback system

- The directions and distances from sources to microphones are calculated based on their relative positions, and from these the delays and attenuations of the signals at the microphones are derived. These signals are ‘recorded’.

- During ‘playback’, the positions of the listener’s ears are calculated based on the XY position of the head and its rotation.

- The distances from the speakers to each ear are calculated, and from these the corresponding delays and attenuations.

- The composite signal from each source that reaches each ear via both speakers is calculated, and from this are found:
  - the relative amplitude ratio at the ears
  - the relative time-of-arrival difference at the ears. This is currently obtained by cross-correlating one ear’s summed signal for that source (from both speakers) against the other ear’s and taking the delay at which the correlation peaks. (There may be methods more representative of the way human hearing ascertains time-of-arrival, and this might be part of a future experiment.)

- There is currently no attempt to simulate HRTF or the attenuating effect of ‘head shadow’. Attenuation is purely based on distance to each ear.

- The system then simulates the signals that would arrive at each ear from a virtual acoustic source were the listener hearing it live rather than via the speakers.

- This virtual source is swept through the XY space in fine increments and at each position the ‘real’ relative timings and volume ratio that would be experienced by the listener are calculated.

- The results are compared to the results previously found for each of the three sources as recorded and played back over the speakers, and plotted as colour and brightness in order to indicate the position the listener might perceive the recorded sources as emanating from, and the strength of the similarity.

- The listener’s location and rotation can be incremented and decremented in order to animate the display, showing how the system changes dynamically with head rotation or position.
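For readers who want to experiment, the playback half of the steps above can be sketched in a few lines of Python. This is not the code behind the plots, only a minimal illustration under assumptions of mine: a speed of sound of 343 m/s, a 100 kHz sample rate, a 0.18 m ear spacing, speakers at (-1, 2) and (1, 2) metres, and a Gaussian-windowed 500 Hz burst as the test signal. All function names are hypothetical. Each source is represented only by its two speaker gains, so there is no timing information in the ‘recording’ at all.

```python
import math

C = 343.0      # assumed speed of sound, m/s
FS = 100_000   # assumed sample rate for this sketch, Hz

def pulse(t, f0=500.0, width=0.002):
    """Test signal: a Gaussian-windowed 500 Hz burst centred on t = 0."""
    return math.exp(-(t / width) ** 2) * math.cos(2 * math.pi * f0 * t)

def ear_positions(head_xy, rotation_deg, ear_spacing=0.18):
    """Left and right ear XY. At 0 degrees the head faces +Y;
    positive rotation turns the head anticlockwise (towards -X)."""
    phi = math.radians(rotation_deg)
    rx, ry = math.cos(phi), math.sin(phi)   # unit vector towards the right ear
    hx, hy = head_xy
    half = ear_spacing / 2
    return (hx - half * rx, hy - half * ry), (hx + half * rx, hy + half * ry)

def ear_signal(ear, speakers, gains, n_samples):
    """Sum of the delayed, 1/distance-attenuated speaker signals at one ear."""
    paths = []
    for (sx, sy), g in zip(speakers, gains):
        d = math.hypot(sx - ear[0], sy - ear[1])
        paths.append((g / d, d / C))          # (attenuated gain, delay in s)
    return [sum(a * pulse(i / FS - delay) for a, delay in paths)
            for i in range(n_samples)]

def interaural_lag(left, right, max_lag):
    """Lag in samples at which the cross-correlation peaks.
    A positive result means the right-ear signal is the later one."""
    n = len(left)
    best_lag, best_score = 0, float("-inf")
    for lag in range(-max_lag, max_lag + 1):
        lo, hi = max(0, -lag), min(n, n - lag)
        score = sum(left[i] * right[i + lag] for i in range(lo, hi))
        if score > best_score:
            best_lag, best_score = lag, score
    return best_lag

def perceived_lag(gains, head_xy=(0.0, 0.0), rotation_deg=0.0):
    """Interaural lag (samples) for a source panned with the given speaker gains."""
    speakers = [(-1.0, 2.0), (1.0, 2.0)]       # left, right (assumed layout)
    left_ear, right_ear = ear_positions(head_xy, rotation_deg)
    n = int(0.02 * FS)                          # 20 ms of signal
    left = ear_signal(left_ear, speakers, gains, n)
    right = ear_signal(right_ear, speakers, gains, n)
    return interaural_lag(left, right, max_lag=FS // 1000)   # search +/- 1 ms

if __name__ == "__main__":
    for gains in [(1.0, 1.0), (1.0, 0.5), (0.5, 1.0)]:
        print(gains, perceived_lag(gains) / FS * 1e6, "microseconds")
```

With the listener centred, equal gains give zero lag, and making one speaker louder moves the correlation peak so that the ear on that side leads, even though both speakers emit the signal at the same instant. Passing a different `head_xy` or `rotation_deg` to `perceived_lag` is the equivalent of moving the listener in the animations.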

The results are very interesting!

Here are some images from the system, plus some small animations.

Spaced omni-directional microphones

In these images, the (virtual) signal was picked up by a pair of (virtual) omnidirectional microphones on either side of the origin, spaced 0.3m apart. This is neither a binaural recording (which would at least have the microphones a little closer together) nor the Blumlein Pair arrangement, but does seem to be representative of some types of purist stereo recording.
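To give a feel for the numbers involved in this arrangement, here is a small sketch of the ‘recording’ step for a spaced omni pair. It assumes a speed of sound of 343 m/s, 1/distance attenuation and microphones on the X axis; the function names and the source positions in the demo are made up for illustration and are not the ones used in the plots.

```python
import math

C = 343.0  # assumed speed of sound, m/s

def record_spaced_omni(source_xy, spacing=0.3):
    """Delay (s) and 1/distance gain of one source at each omni microphone.
    The microphones sit on the X axis, spacing/2 either side of the origin.
    Returns [(delay_left, gain_left), (delay_right, gain_right)]."""
    sx, sy = source_xy
    result = []
    for mic_x in (-spacing / 2, spacing / 2):   # left, then right
        d = math.hypot(sx - mic_x, sy)
        result.append((d / C, 1.0 / d))
    return result

def interchannel_difference(source_xy, spacing=0.3):
    """Time difference (s) and level difference (dB) between the channels.
    Both are positive when the left channel is earlier / louder."""
    (t_l, g_l), (t_r, g_r) = record_spaced_omni(source_xy, spacing)
    return t_r - t_l, 20 * math.log10(g_l / g_r)

if __name__ == "__main__":
    for src in [(-1.0, 2.0), (0.0, 2.0), (1.0, 2.0)]:   # hypothetical positions
        dt, db = interchannel_difference(src)
        print(src, round(dt * 1e6, 1), "us", round(db, 2), "dB")
```

Unlike the coincident pair, this arrangement records a genuine time difference between the channels, bounded by the spacing divided by the speed of sound (a little under 0.9 ms for 0.3 m), alongside a small level difference.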

The positions of the three sources during (virtual) recording are shown overlaid with the two speakers, plus the listener’s head and ears. Red indicates response to SRC0; green SRC1; and blue SRC2.

Effect of head rotation on perceived direction of sources based on inter-aural timing when listener is close to the ‘sweet spot’.

Effect of side-to-side movement of listener on perceived imaging based on inter-aural timing.

Compound movement of listener, including front-to-back movement and head rotation.

Effect of listener movement on perceived image based on inter-aural amplitudes.

Coincident directional microphones (Blumlein Pair)

Here, directional microphones are set at the origin at right angles to each other, as shown in the earlier diagram. They follow Blumlein’s description in the patent, i.e. output is proportional to the cosine of the angle of incidence.
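The cosine law is simple enough to write down directly. In this sketch (the function name and the convention that ‘forward’ is +Y are my own assumptions) the two microphone axes point 45 degrees to the left and right of forward. Distance attenuation is left out because the microphones are coincident, so it affects both channels equally.

```python
import math

def blumlein_gains(source_xy):
    """Left/right channel gains for a source at source_xy, for two coincident
    figure-of-eight microphones at the origin aimed 45 degrees either side of +Y."""
    x, y = source_xy
    bearing = math.atan2(x, y)                 # 0 = straight ahead, positive = to the right
    left = math.cos(bearing + math.pi / 4)     # left mic axis is at bearing -45 degrees
    right = math.cos(bearing - math.pi / 4)    # right mic axis is at bearing +45 degrees
    return left, right

if __name__ == "__main__":
    for src in [(0.0, 1.0), (-1.0, 1.0), (1.0, 1.0), (0.0, -1.0)]:
        print(src, [round(g, 3) for g in blumlein_gains(src)])
```

A source straight ahead lands equally in both channels, a source on one microphone’s axis appears in that channel only, and a source behind the pair is picked up with inverted polarity. Because cos(b - 45°) = sin(b + 45°), the squares of the two gains always sum to one, which is the same constant-power law that a sine/cosine pan pot follows.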

Time-of-arrival based perception of direction as captured by a coincident pair of directional microphones (Blumlein Pair) and played back over stereo speakers, with compound movement of the listener.

A similar test, but showing perceived locations of the three sources based on inter-aural volume level

In no particular order, some observations on the results:

- A stereo image based on time-of-arrival differences at the ears can be created with two spaced omni-directional microphones or coincident directional microphones. Note that the aim is not to ‘track’ the image with the user’s head movement (like headphones would), but to maintain stable positions in space even as the user turns away from ‘the stage’.

- The Blumlein Pair gives a stable image with listener movement based on time-of-arrival. The image based on inter-aural amplitude may not be as stable, however.

- Interaural timing can only give a direction, not distance.

- A phantom mirror image of equal magnitude also accompanies the frontwards time-of-arrival-derived direction, but this would also be true of ‘real life’. The way this behaves with dynamic head movement isn’t necessarily correct; at some locations and listener orientations maybe the listener could be confused by this.

- Relative volume at the two ears (as a ratio) gives a ‘blunt’ image that behaves differently from the time-of-arrival based image when the listener moves or turns their head. The plot shows that the same ratio can be achieved for different combinations of distance and angle so on its own it is not unambiguous.

- Even if the time-of-arrival image stays meaningful with listener movement, the amplitude-based image may not.

- Combined with timing, relative interaural volume might provide some cues for distance (not necessarily the ‘true’ distance).

- No doubt other cues combining indirect ‘ambient’ reflections in the recording, comb-filtering, dynamic phase shifts with head movement, head-related transfer function, etc. are also used by the listener and these all contribute to the perception of depth.

- The cues may not all ‘hang together’, particularly in the situation of movement of the listener, but the human brain seems to make reasonable sense of them once the movement stops.

- The Blumlein Pair does, indeed, create a time-of-arrival-based image from amplitude variations only. And this image is stable with movement of the listener – a truly remarkable result, I think.

- Choice of microphone arrangement may influence the sound and stability of the image.

- Maybe there is also an issue regarding the validity of different recording techniques when played back over headphones versus speakers. The Blumlein Pair gives no time-of-arrival cues when played over headphones.

- The audio scene is generally limited to the region between the two speakers.

- The simulation does not address ‘panpot’ stereo yet, although as noted earlier, the Blumlein microphone technique is doing something very similar.

- In fact, over loudspeakers, the ‘panpot’ may actually be the most correct way of artificially placing a source in the stereo field, yielding a stable, time-of-arrival-based position.
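Two of the ambiguities noted in the list, the front/back mirror image in the timing cue and the many positions that share one volume ratio, follow directly from the geometry of a live source and two ears. The sketch below assumes ears 0.18 m apart on the X axis, a head facing +Y, 1/distance attenuation and no head shadow; the names are hypothetical. Points with a fixed ratio of distances to the two ears lie on a circle (a circle of Apollonius), which is what `equal_ratio_point` walks around.

```python
import math

C = 343.0         # assumed speed of sound, m/s
EAR_HALF = 0.09   # assumed half ear spacing, m; ears at (-0.09, 0) and (0.09, 0)

def ear_distances(x, y):
    """Distances from a live source at (x, y) to the left and right ears."""
    return math.hypot(x + EAR_HALF, y), math.hypot(x - EAR_HALF, y)

def itd(x, y):
    """Interaural time difference in seconds; positive when the left ear is first."""
    d_l, d_r = ear_distances(x, y)
    return (d_r - d_l) / C

def level_ratio(x, y):
    """Left/right amplitude ratio under 1/distance attenuation."""
    d_l, d_r = ear_distances(x, y)
    return d_r / d_l

def equal_ratio_point(k, phi):
    """A point whose level_ratio is k (k > 0, k != 1), chosen by angle phi
    around the circle of all such points."""
    cx = EAR_HALF * (1 + k * k) / (1 - k * k)   # circle centre lies on the X axis
    r = math.sqrt(cx * cx - EAR_HALF ** 2)
    return cx + r * math.cos(phi), r * math.sin(phi)

if __name__ == "__main__":
    print("front:", itd(-1.0, 2.0), "mirror behind:", itd(-1.0, -2.0))
    for phi in (math.pi / 2, math.pi / 4):
        p = equal_ratio_point(1.1, phi)
        print("position", p, "distance", math.hypot(*p), "ratio", level_ratio(*p))
```

A source and its mirror image behind the listener are the same distance from each ear, so the timing cue cannot tell them apart until the head turns. The two printed positions differ in both bearing and distance yet give the same volume ratio, which is why the amplitude-based plots look ‘blunt’.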

Perhaps the thing that I find most exciting is that the animations really do seem to reflect what happens when I listen to certain recordings on a stereo system and shift position while concentrating on what I am hearing. I think that the directions of individual sources do indeed sometimes ‘flip’ or become ambiguous, and sometimes you need to ‘lock on’ to the image after moving, and from then on it seems stable and you can’t imagine it sounding any other way. Time-of-arrival and volume-based cues (which may be in conflict in certain listening positions), as well as the ‘mirror image’ time-of-arrival cue may be contributing to this confusion. These factors may differ with signal content e.g. the frequency ranges it covers.

It has occurred to me that in creating this simulation I might have been in danger of shattering my illusions about stereo, spoiling the experience forever, but in the end I think my enthusiasm remains intact. What looked like a defect with loudspeakers (the acoustic cross-coupling between channels) turns out to be the reason why stereo works so compellingly.

In an earlier post I suggested that maybe plain stereo from speakers was the optimal way to enjoy audio and I think I am more firmly persuaded of that now. Without having to wear special apparatus, have one’s ears moulded, make sure one’s face is visible to a tracking camera, or dedicate a large space to a central hot-seat, one or several listeners can enjoy a semi-‘holographic’ rendering of an acoustic recording that behaves in a logical way even as the listener turns their head. The system blends the listening room’s acoustics with the recording, meaning that there is a two-way element to the experience whereby listeners can talk and move around and remain connected with the recording in a subtle, transparent way.

Conclusion

Stereo over speakers produces a seemingly realistic three-dimensional ‘image’ that remains stable with listener movement. How this works is perhaps more subtle than is sometimes thought.

The Blumlein Pair microphone arrangement records no timing differences between left and right, but by listening over loudspeakers, the directional volume variations are converted into time-of-arrival differences at the listener’s ears. The acoustic cross-coupling from each speaker to ‘the wrong ear’ is a necessary factor in this.

Some ‘purist’ microphone techniques may not be as valid as others when it comes to stability of the image or the positioning of sources within the field. Techniques that are appropriate for headphones may not be valid for speakers, and vice versa.