As I mentioned earlier, I would like to have the flexibility of using digital audio data that emanates externally from the PC that is performing the DSP, and this necessarily will have a different sample clock. Something has got to give!

If the input were analogue, you would just sample it with an ADC locked to your DAC’s sample rate, and the source’s own sample rate wouldn’t matter to you. With a standard digital audio source (e.g. S/PDIF) you need to do the same thing, but purely in software. The incoming sampled data points are notionally turned into a continuous waveform in memory by emulating a DAC reconstruction filter using floating point maths. You can then sample it wherever you want, at a rate locked to the DAC’s sample rate.

You still ‘eat’ the incoming data at the rate at which it comes in, but you vary the number of samples that you ‘decimate’ from it (very, very slightly).

The control algorithm for locking this re-sampling to the DAC’s sample rate is not completely trivial, because the PC’s only knowledge of the DAC and S/PDIF sample rates comes from notifications that large chunks of data have arrived or left, with unknown amounts of jitter. An accurate measure of the relative sample rates can only be established by averaging with a very long time constant. In reality the program never actually calculates the sample rate at all, but merely maintains a constant-ish difference between the read and write pointer positions of a circular buffer. It relies on adequate latency and on the two sample rates being reasonably stable by virtue of being derived from crystal oscillators. The corrections will, in practice, be tiny and/or occasional.
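The pointer-gap idea can be sketched as a simple proportional controller. This is a minimal Python illustration under my own assumptions, not the program's actual control law: the class name `DriftController`, the one-pole smoothing, and the `gain`, `smoothing` and `max_correction` values are all hypothetical. Here `step` means input samples consumed per output sample, so a value just above 1.0 drains a buffer that is filling up.

```python
class DriftController:
    """Hypothetical sketch: keep a circular buffer's read/write pointer gap
    near a target by nudging the resampling step very slightly."""

    def __init__(self, target_fill, smoothing=0.001, gain=1e-7, max_correction=1e-4):
        self.target_fill = target_fill        # desired gap between pointers, in samples
        self.smoothing = smoothing            # one-pole averaging coefficient (assumed value)
        self.gain = gain                      # step correction per sample of error (assumed value)
        self.max_correction = max_correction  # clamp so that corrections stay tiny
        self.avg_fill = float(target_fill)

    def update(self, write_pos, read_pos, buffer_len):
        """Call once per processed block; returns the step to use for that block."""
        # Pointer gap in a circular buffer, correct across wrap-around.
        fill = (write_pos - read_pos) % buffer_len
        # Heavy smoothing: chunked arrival notifications are jittery, so only a
        # long-time-constant average says anything useful about the clock ratio.
        self.avg_fill += self.smoothing * (fill - self.avg_fill)
        error = self.avg_fill - self.target_fill
        correction = max(-self.max_correction, min(self.max_correction, self.gain * error))
        # Buffer filling up -> step above 1.0 -> input consumed slightly faster.
        return 1.0 + correction
```

A purely proportional loop like this settles with a small constant offset in the pointer gap (a non-unity step needs a non-zero error to sustain it). That is harmless here, since only the stability of the gap matters; an integral term would remove the offset at the cost of more tuning.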

How is the interesting problem of re-sampling solved?

In order to experiment with it I have created a program that runs on a PC and does the following:

- Synthesises a test signal as an array of floating point values at a notional sample rate of 44.1 kHz. This can be a sine wave, or a combination of sine waves of different frequencies.

- Plots the incoming waveform as time domain dots.

- Plots the waveform as it would appear when reconstructed with the sinc filter. This is a sanity check that the filter is doing approximately the right thing.

- Resamples the data at a different sample rate using floating point maths (the step size can be any arbitrary value, e.g. 0.9992 or 1.033). The method can be nearest-neighbour, linear interpolation, or sinc & linear interpolation.

- Plots the resampled waveform as time domain dots.

- Passes the result into an FFT (65536 points), windowing the data with a raised cosine window.

- Plots the resulting resampled spectrum in terms of frequency and amplitude in dB.
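The signal side of such a test bed can be sketched in a few lines. This is a stdlib-only Python sketch with hypothetical function names; rather than a full 65536-point FFT, it evaluates a single DFT bin directly, which is much slower but gives the same value for that bin. I have used the periodic form of the raised cosine (Hann) window, which is an assumption about the test bed.

```python
import math

FS = 44100.0  # notional sample rate of the test bed, Hz

def synthesise(freqs_hz, n, fs=FS, amplitude=1.0):
    """n samples of a sum of equal-amplitude sine waves."""
    return [sum(amplitude * math.sin(2 * math.pi * f * i / fs) for f in freqs_hz)
            for i in range(n)]

def raised_cosine_window(n):
    """Periodic raised cosine (Hann) window of length n."""
    return [0.5 * (1.0 - math.cos(2 * math.pi * i / n)) for i in range(n)]

def bin_level_db(samples, k):
    """Windowed magnitude of DFT bin k, in dB relative to a full-scale on-bin sine.
    A direct single-bin DFT stands in for the FFT here (sketch only)."""
    n = len(samples)
    w = raised_cosine_window(n)
    re = im = 0.0
    for i, (s, wi) in enumerate(zip(samples, w)):
        angle = 2 * math.pi * k * i / n
        re += s * wi * math.cos(angle)
        im -= s * wi * math.sin(angle)
    # A unit sine exactly on bin k gives |X[k]| = sum(w) / 2 with this window.
    reference = sum(w) / 2.0
    return 20 * math.log10(max(math.hypot(re, im), 1e-300) / reference)
```

Levels are relative to a full-scale sine that falls exactly on a bin; a tone between bins reads slightly lower because of the window's scalloping loss.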

This is an ideal test bed for experimenting with different algorithms and getting a feel for how accurate they are.

Nearest-neighbour and linear interpolation are pretty self-explanatory methods; the sinc method is similar to the one described here:

https://www.dsprelated.com/freebooks/pasp/Windowed_Sinc_Interpolation.html
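For reference, the two simple methods can be sketched as follows. This is a minimal Python illustration (the test bed's own code isn't shown), assuming the input is a list of floats and `step` is the number of input samples advanced per output sample; the function names are hypothetical.

```python
import math

def resample_nearest(x, step, n_out):
    """Nearest-neighbour: output k takes the input sample closest to k * step."""
    out = []
    for k in range(n_out):
        i = math.floor(k * step + 0.5)   # round half up (Python's round() is banker's)
        out.append(x[i] if 0 <= i < len(x) else 0.0)
    return out

def resample_linear(x, step, n_out):
    """Linear interpolation between the two input samples either side of k * step."""
    out = []
    for k in range(n_out):
        pos = k * step                   # computed afresh, so no accumulated drift
        i = math.floor(pos)
        frac = pos - i
        if 0 <= i and i + 1 < len(x):
            out.append(x[i] + frac * (x[i + 1] - x[i]))
        else:
            out.append(0.0)              # outside the available data
    return out
```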

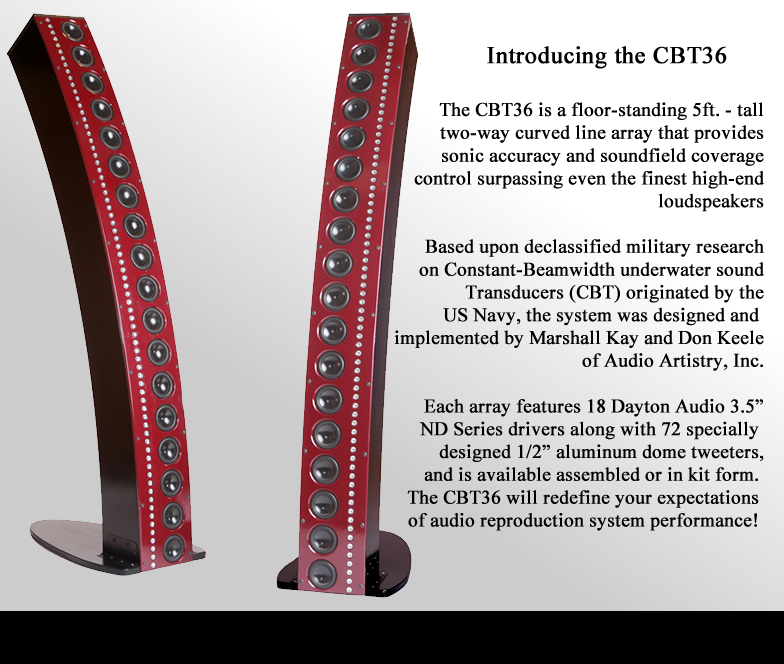

I haven’t completely reproduced their method, but I was inspired by this image:

![Windowed sinc interpolation waveforms (dsprelated.com)](https://i0.wp.com/www.dsprelated.com/josimages_new/pasp/img1161.png)

The sinc function is the impulse response of the ideal ‘brick wall’ low pass filter and is calculated as sin(πx)/(πx), with the value 1 at x = 0. In theory it extends from minus to plus infinity, but for practical use it is windowed so that it tapers to zero at plus or minus the desired width, which should be as wide as practical.
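As a concrete reference for the formula, here is a minimal Python sketch of a windowed sinc kernel evaluated at an arbitrary (non-integer) distance from its centre. The raised cosine window and the parameter name `half_width` (half the kernel width, in input samples) are my own illustrative choices.

```python
import math

def sinc(x):
    """sin(pi*x)/(pi*x), with its limit value 1 at x = 0."""
    return 1.0 if x == 0.0 else math.sin(math.pi * x) / (math.pi * x)

def raised_cosine(x, half_width):
    """1 at the centre, tapering smoothly to 0 at +/-half_width, 0 beyond."""
    if abs(x) >= half_width:
        return 0.0
    return 0.5 * (1.0 + math.cos(math.pi * x / half_width))

def windowed_sinc(x, half_width):
    """Kernel value at distance x (in input samples) from the kernel centre."""
    return sinc(x) * raised_cosine(x, half_width)
```

`windowed_sinc(0.0, 25)` is 1 and, at every other integer distance, the value is zero to within rounding, which is why a reconstruction evaluated exactly at an input sample position returns that sample unchanged.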

The filter can be set at a lower cutoff frequency than Nyquist by stretching it out horizontally (and scaling its amplitude down by the same factor to keep unity gain), and this would be necessary to avoid aliasing if re-sampling at an effectively slower sample rate.
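Stretching can be sketched like this, again with a raised cosine window and hypothetical names. Multiplying by the cutoff ratio keeps the taps summing to about 1, so the passband gain is unchanged, and the window is stretched by the same factor so that it still spans the same number of sinc lobes.

```python
import math

def sinc(x):
    return 1.0 if x == 0.0 else math.sin(math.pi * x) / (math.pi * x)

def raised_cosine(x, half_width):
    if abs(x) >= half_width:
        return 0.0
    return 0.5 * (1.0 + math.cos(math.pi * x / half_width))

def stretched_kernel(x, half_width, cutoff_ratio):
    """Low-pass kernel with cutoff at cutoff_ratio * Nyquist (0 < cutoff_ratio <= 1).
    x is the distance from the kernel centre in input samples."""
    r = cutoff_ratio
    span = half_width / r            # window stretched by the same factor as the sinc
    return r * sinc(r * x) * raised_cosine(x, span)
```

With `cutoff_ratio=0.5`, for example, the integer taps sum to about 1 (full gain at DC), while their alternating sum, which is the response at the original Nyquist frequency, is close to 0.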

If the kernel is slid along the incoming sample points and a point-by-point multiply and sum is performed, the result is the reconstructed waveform. What the above diagram shows is that the kernel can take the form of discrete sampled points, calculated as the values they would have if the kernel were centred at any arbitrary point.

So resampling is very easy: simply synthesise a sinc kernel in the form of sampled points based on the non-integer position you want to reconstruct, and multiply-and-add all the points corresponding to it.
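The whole procedure fits in a few lines if the kernel is simply computed on the fly for each output position. This minimal Python sketch does exactly that (the expensive route that lookup tables avoid); `interpolate_at`, `resample_sinc` and the default `half_width` of 25, i.e. a kernel width of 50, are illustrative assumptions.

```python
import math

def sinc(x):
    return 1.0 if x == 0.0 else math.sin(math.pi * x) / (math.pi * x)

def raised_cosine(x, half_width):
    if abs(x) >= half_width:
        return 0.0
    return 0.5 * (1.0 + math.cos(math.pi * x / half_width))

def interpolate_at(x, pos, half_width):
    """Value of the reconstructed waveform at non-integer position pos
    (in input samples): centre the windowed sinc on pos, then multiply-and-add."""
    base = math.floor(pos)
    acc = 0.0
    # Only inputs within half_width of pos can have non-zero weight.
    for i in range(base - half_width + 1, base + half_width + 1):
        if 0 <= i < len(x):          # samples beyond the ends count as zero
            d = pos - i
            acc += x[i] * sinc(d) * raised_cosine(d, half_width)
    return acc

def resample_sinc(x, step, n_out, half_width=25):
    """Output k is the reconstructed waveform at input position k * step."""
    return [interpolate_at(x, k * step, half_width) for k in range(n_out)]
```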

A complication is the need to shorten the filter to a practical length, which involves windowing it, i.e. multiplying it by a smooth function that tapers to zero at the edges. I previously mentioned the Lanczos kernel, which apparently uses a widened copy of the central lobe of the sinc function as the window. But looking at it, I don’t see why this is supposed to be a good window function: it doesn’t taper gradually to zero, and at non-integer sample positions you would either have to extend it with zeroes abruptly, or accept non-zero values at its edges.
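To put a number on the edge behaviour, the sketch below compares the slope of the two windows just inside their edge at x = a. This is my own illustration: the Lanczos window is written as the central sinc lobe stretched over -a..a, and the finite-difference step `eps` is an arbitrary choice. The Lanczos window does reach zero at ±a, but it arrives with a slope of about -1/a per sample, whereas the raised cosine arrives with zero slope.

```python
import math

def lanczos_window(x, a):
    """Central lobe of sinc stretched to span -a..a; zero outside."""
    if abs(x) >= a:
        return 0.0
    u = x / a
    return 1.0 if u == 0.0 else math.sin(math.pi * u) / (math.pi * u)

def raised_cosine(x, a):
    """Raised cosine spanning -a..a; zero outside."""
    if abs(x) >= a:
        return 0.0
    return 0.5 * (1.0 + math.cos(math.pi * x / a))

def slope_near_edge(window, a, eps=1e-6):
    """One-sided finite-difference slope just inside the edge at x = a."""
    return (window(a - eps, a) - window(a - 2 * eps, a)) / eps

print(slope_near_edge(lanczos_window, 25.0))   # close to -1/25
print(slope_near_edge(raised_cosine, 25.0))    # close to 0
```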

Instead, I have decided to use a simple raised cosine as the windowing function, and to reduce its width slightly to give me some leeway in the kernel’s position between input samples. At the extremities I ensure it is set to zero. It seems to give a purer output than my version of the Lanczos kernel.

Pre-calculating the kernel

Although the kernel is very simple, calculating it on the fly at every new position would be extremely costly in computing power, so the obvious solution is to use lookup tables. The pre-calculated kernels on either side of the desired sample position are each evaluated to give two output values, and linear interpolation between these gives the value at the exact position. Because memory is plentiful in PCs, there is no need to skimp on the number of pre-calculated kernels – you could use a thousand of them. As a result, the errors associated with this linear interpolation can be reduced to negligible levels.
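A minimal Python sketch of that lookup-table scheme, under my own assumptions: a raised cosine window, tables indexed by fractional offset, and the hypothetical names `build_tables` and `interpolate_lut`. Each output needs two multiply-and-add passes (one per neighbouring kernel) followed by one linear interpolation.

```python
import math

def sinc(x):
    return 1.0 if x == 0.0 else math.sin(math.pi * x) / (math.pi * x)

def raised_cosine(x, half_width):
    if abs(x) >= half_width:
        return 0.0
    return 0.5 * (1.0 + math.cos(math.pi * x / half_width))

def build_tables(half_width, phases):
    """tables[p] holds the kernel taps for a centre p/phases of a sample past an
    input sample; p runs 0..phases inclusive so every position has an upper neighbour."""
    tables = []
    for p in range(phases + 1):
        frac = p / phases
        # Tap j multiplies input sample floor(pos) + j, at distance frac - j.
        tables.append([sinc(frac - j) * raised_cosine(frac - j, half_width)
                       for j in range(-half_width + 1, half_width + 1)])
    return tables

def interpolate_lut(x, pos, tables, half_width):
    phases = len(tables) - 1
    base = math.floor(pos)
    scaled = (pos - base) * phases
    p = min(int(scaled), phases - 1)   # pre-calculated kernel just below pos
    t = scaled - p                     # how far pos lies towards kernel p + 1
    lo = hi = 0.0
    for j, (k_lo, k_hi) in enumerate(zip(tables[p], tables[p + 1])):
        i = base - half_width + 1 + j
        if 0 <= i < len(x):
            lo += x[i] * k_lo
            hi += x[i] * k_hi
    # Two multiply-and-add results, then linear interpolation between them.
    return lo + t * (hi - lo)
```

With 500 phases, the tables for a width-50 kernel hold 501 × 50 values, about 25,000 floats, which is trivial for a PC.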

The horizontal position of the raised cosine window follows the position of the centre of the kernel for all the versions that are calculated to lie in between the incoming sample points.

All that remains is to decide how wide the kernel needs to be for adequate accuracy in the reconstruction – and this is where my demo program comes in. I apologise that there now follows a whole load of similar looking graphs, demonstrating the results with various signals and kernel sizes, etc.

1 kHz sine wave

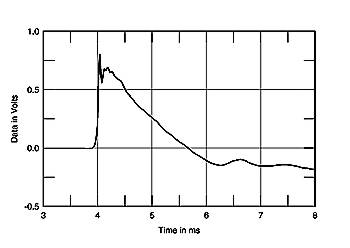

First we can look at the standard test signal: a 1 kHz sine wave. In the following image, the original sine wave points are shown joined with straight lines at the top right, followed by how the points would look when emerging from a DAC that has a sinc-based reconstruction filter (in this case, the two images look very similar).

Next down in the three time domain waveforms comes the resampled waveform after we have resampled it to shift its frequency by a factor of 0.9 (a much larger ratio than we will use in practice). In this first example, the resampling method being used is ‘nearest neighbour’. As you can see, the results are disastrous!

1 kHz sine wave, frequency shift 0.9, nearest neighbour interpolation


The discrete steps in the output waveform are obvious, and the FFT shows huge spikes of distortion.

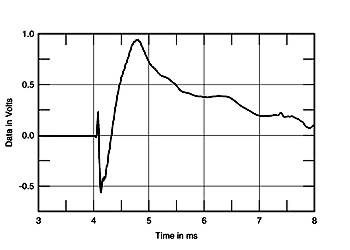

Linear interpolation is quite a bit better in terms of the FFT, and the time domain waveform at the bottom right looks much better.

1 kHz sine wave, frequency shift 0.9, linear interpolation

However, the FFT magnitude display reveals that it is clearly not ‘hi-fi’.

Now, compare the results using sinc interpolation:

1 kHz sine wave, frequency shift 0.9, sinc interpolation, kernel width 50

As you can see, the FFT plot is absolutely clean, indicating that this result is close to distortion-free.

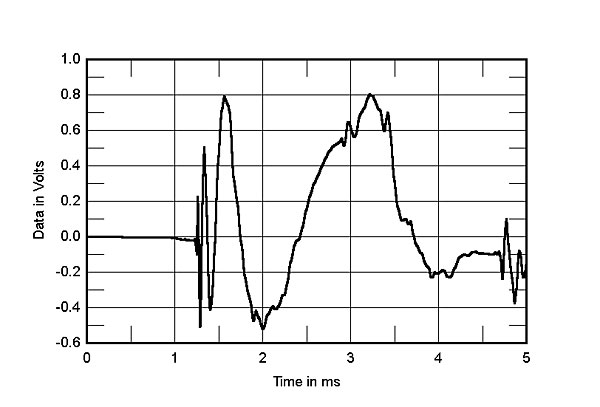

Next we can look at something very different: a 20 kHz sine wave.

20 kHz sine wave

20 kHz sine wave, frequency shift 0.9, nearest neighbour interpolation

With nearest-neighbour resampling, the results are again disastrous. On the right-hand side, though, the middle of the three time-domain plots shows something very interesting: even though the discrete points look nothing like a sine wave at this frequency, the reconstruction filter ‘rings’ in between the points, producing a perfect sine wave with absolutely uniform amplitude. This is what any normal DAC produces, and most people don’t realise it; they often assume that digital audio falls apart at the top end, but it doesn’t: it is perfect.
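The ringing between the points can be checked numerically. This stdlib-only Python sketch (my own, with a hypothetical `reconstruct` function) samples a 20 kHz sine at 44.1 kHz, measures how small pairs of neighbouring raw samples can get, and then reconstructs the waveform on a grid ten times finer using a raised-cosine-windowed sinc with a half-width of 100 samples, i.e. a kernel width of about 200 (an assumed value, matching one of the widths tested in this section).

```python
import math

FS = 44100.0      # sample rate, Hz
F = 20000.0       # test tone, Hz
HALF_WIDTH = 100  # kernel half-width in samples (width of about 200)

def sinc(x):
    return 1.0 if x == 0.0 else math.sin(math.pi * x) / (math.pi * x)

def raised_cosine(x, half_width):
    if abs(x) >= half_width:
        return 0.0
    return 0.5 * (1.0 + math.cos(math.pi * x / half_width))

def reconstruct(x, pos, half_width):
    """Windowed-sinc reconstruction of the sampled waveform at position pos."""
    base = math.floor(pos)
    acc = 0.0
    for i in range(base - half_width + 1, base + half_width + 1):
        if 0 <= i < len(x):
            d = pos - i
            acc += x[i] * sinc(d) * raised_cosine(d, half_width)
    return acc

x = [math.sin(2 * math.pi * F * i / FS) for i in range(2000)]

# Raw samples: in places two consecutive samples are both small in magnitude.
pair_peaks = [max(abs(x[i]), abs(x[i + 1])) for i in range(1000, 1100)]

# Reconstruction on a grid ten times finer than the input samples.
grid = [1000 + 0.1 * k for k in range(1000)]
errors = [abs(reconstruct(x, t, HALF_WIDTH) - math.sin(2 * math.pi * F * t / FS))
          for t in grid]

print(f"smallest pair peak among raw samples: {min(pair_peaks):.3f}")
print(f"worst reconstruction error:           {max(errors):.5f}")
```

Comparing the two printed figures makes the point: in places the raw samples come nowhere near full amplitude, yet the reconstructed waveform should stay very close to the ideal sine throughout.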

Linear interpolation is better than nearest-neighbour, but pretty much useless for our purposes.

20 kHz sine wave, frequency shift 0.9, linear interpolation

Sinc interpolation is much better!

20 kHz sine wave, frequency shift 0.9, sinc interpolation, kernel size 50

However, there is an unwanted spike at the right-hand side (note that the main signal is at 18 kHz because it has been shifted down by a factor of 0.9). This spike appears because the sinc kernel is not wide enough; in this case its width has been set at 50 (with 500 pre-calculated versions of it at different time offsets between sample points).

If we increase the width of the kernel to 200 (actually 201 because the kernel is always symmetrical about a central point with value 1.0), we get this:

20kHz sine wave, frequency shift 0.9, sinc interpolation, kernel size 200


The spike is almost at acceptable levels. Increasing the width to 250 we get this:

20 kHz sine wave, frequency shift 0.9, sinc interpolation, kernel size 250

And at 300 we get this:

20 kHz sine wave, frequency shift 0.9, sinc interpolation, kernel size 300

Clearly the kernel width does need to be in this region for the highest quality.

For completeness, here is the system working on a more complex waveform: the sum of three frequencies (14, 18 and 19 kHz), all at the same amplitude, with a frequency shift of 1.01.

14 kHz, 18 kHz, 19 kHz sum

Nearest neighbour:

14, 18, 19 kHz sine wave, nearest neighbour interpolation


Linear interpolation:

14, 18, 19 kHz sine wave, linear interpolation

Sinc interpolation with a kernel width of 50:

14, 18, 19 kHz sine wave, sinc interpolation, kernel width 50

Kernel width increased to 250:

14, 18, 19 kHz sine wave, sinc interpolation, kernel width 250

More evidence that the kernel width needs to be in this region.

Ready made solutions

Re-sampling is often done in dedicated hardware like Analog Devices’ AD1896. Some advanced sound cards like the Creative X-Fi can re-sample everything internally to a common sample rate using powerful dedicated processors – this is the solution that makes connecting digital audio sources together almost as simple as analogue.

In theory, stuff like this goes on inside Linux already, in systems like JACK – apparently. But it just feels too fragile: I don’t know how to make sure it is working, and I don’t really have any handle on the quality of it. This is a tricky problem to solve by trial-and-error because a system can run for ages without any sign that clocks are drifting.

In Windows, there is a product called “Virtual Audio Cable” that I know performs re-sampling using methods along these lines.

There are libraries around that supposedly can do resampling, but the quality is unknown – I was looking at one that said “Not the best quality” so I gave up on that one.

I have a feeling that much of the code was developed at a time when processors were much less powerful than they are now and so the algorithms are designed for economy rather than quality.

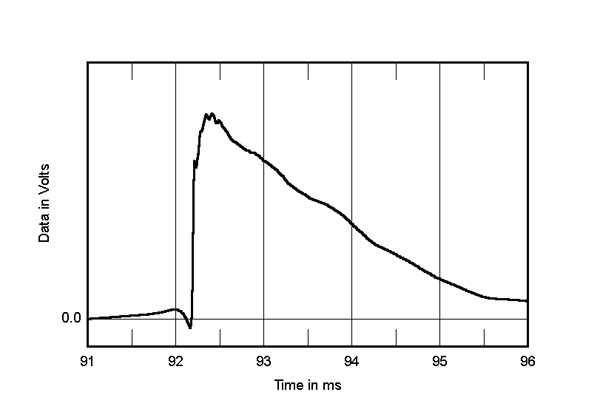

Software-based sinc resampling in practice

I have grafted the code from my demo program into my active crossover application and set it running with TOSLink from a CD player going into a cheap USB sound card (Maplin) which my software uses to acquire the stream, and my software’s output going to a better multichannel sound card (the Xonar U7). The TOSLink data is being resampled in order to keep it aligned with the U7’s sample rate. I have had it running for 20 hours without incident.

Originally, before developing the test bed program, I set the kernel size at 50, fearing that anything larger would stress the Intel Atom CPU. However, I now realise that a width of at least 250 is necessary, so with trepidation I upped it to this value. The CPU load trace went up a bit in the Ubuntu system monitor, but not much; the cores are still running cool. The power of modern CPUs is ridiculous!! Remember that for each of the two samples arriving at 44.1 kHz, the algorithm is performing 500 floating point multiplications and sums, yet it hardly breaks into a sweat. There are absolutely no clever efficiencies in the programming. Amazing.
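The workload is easy to estimate. This back-of-envelope Python sketch assumes that the 500 figure comes from evaluating the two adjacent 250-tap pre-calculated kernels per output sample, and that the ‘two samples’ are the left and right channels; both are my reading rather than stated facts.

```python
taps_per_kernel = 250     # kernel width adopted after the test-bed results
kernels_per_output = 2    # pre-calculated kernels either side of the position
channels = 2              # assumed: the "two samples" are left and right
sample_rate = 44100       # input sample rate, Hz

macs_per_channel_sample = taps_per_kernel * kernels_per_output
macs_per_second = macs_per_channel_sample * channels * sample_rate
print(macs_per_channel_sample, macs_per_second)  # prints: 500 44100000
```

That is roughly 44 million multiply-and-adds per second, a modest load for a CPU clocked in the GHz range.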


There’s an amazing
